Making an easy-to-use product that improves people’s lives is the core of our brand at eero. That mindset also applies to how we develop software, including how we write, test, and distribute code for our Android app. We’ve refined our build process from one that required an assortment of manual, error-prone tasks into one that improves team communication and lets us develop more quickly.

Requirements for our build process

When thinking about improvements, our driving goals were to automate tedious, error-prone tasks and improve communication. We also wanted to catch code issues as early as possible without creating additional developer work. To make our lives easier, this build process needed to be:

- Stable: the build process always works

- Comprehensive: new code is tested, and style checks and code analysis are run before changes are integrated

- Communicative: repetitive work that facilitates team communication is automated

Steps for an awesome build process

Our focus is on how code is integrated and shared within the team. Without interfering with workflow preferences, we try to give developers non-intrusive warnings about potential issues.

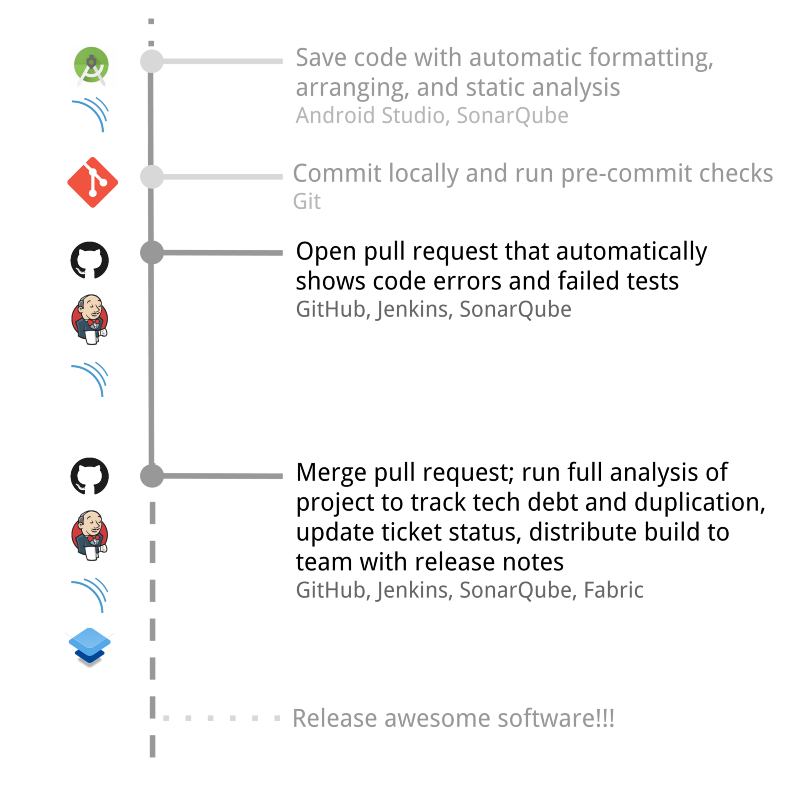

The most important parts of our build process can be divided into several steps:

- Run checks in local environment

- Run tests on Pull Requests

- Run static code analysis on Pull Requests

- Update ticket status with build number after successful build

- Distribute builds with release notes to team members

1) Run checks in local environment

Whenever we save code, a set of formatting and styling rules is applied so the code looks consistent and we run into fewer conflicts when integrating changes.

In Android Studio, our environment for developing eero’s Android app, potential bugs and code problems are highlighted to make the developer aware of them. This gives the developer early feedback without being intrusive or prescriptive about how to fix the problem.
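Save actions and inspections only run inside the IDE. Some teams also enforce a few of the same rules in a git pre-commit hook, which is a different mechanism from the one described above. The sketch below shows what such a local check might look like. It makes three assumptions: the two rules (no trailing whitespace, no tab indentation), the function names, and the idea that the file list comes from `git diff --cached --name-only`. None of these are eero’s actual configuration.

```python
import sys
from pathlib import Path


def find_style_issues(path_name, text):
    """Return (path, line_number, message) tuples for two illustrative style rules."""
    issues = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Hypothetical rule 1: no trailing spaces or tabs.
        if line != line.rstrip():
            issues.append((path_name, number, "trailing whitespace"))
        # Hypothetical rule 2: indent with spaces, not tabs.
        if line.startswith("\t"):
            issues.append((path_name, number, "tab indentation"))
    return issues


def main(paths):
    issues = []
    for name in paths:
        issues.extend(find_style_issues(name, Path(name).read_text(encoding="utf-8")))
    for path_name, number, message in issues:
        print(f"{path_name}:{number}: {message}")
    # A non-zero exit status makes git abort the commit when this runs as a pre-commit hook.
    return 1 if issues else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv[1:]))
```

Keeping the rules in a pure function (`find_style_issues`) makes them easy to unit test separately from the git plumbing.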

2) Run tests on Pull Requests

Tests aren’t valuable unless they’re being run regularly and providing feedback. Including tests in our process also encourages a culture of writing tests and testable code.

“More testing should be done automatically. It’s important to note that by ‘automatically’ we mean that the test results are interpreted automatically as well.” (Andrew Hunt and David Thomas, The Pragmatic Programmer)
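Interpreting results automatically usually means reading the machine-readable reports a test run produces rather than scrolling through console output. The sketch below assumes the JUnit-style XML reports that Gradle writes for unit tests (files named `TEST-*.xml`, typically somewhere under a `build/test-results/` directory). The function names are hypothetical, and the sketch only shows the idea of turning those reports into a pass/fail summary.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def summarize_suite(xml_source):
    """Summarize one JUnit-style <testsuite> report (str or bytes)."""
    suite = ET.fromstring(xml_source)
    failed = []
    for case in suite.iter("testcase"):
        # A test case counts as failed if it has a <failure> or <error> child.
        if case.find("failure") is not None or case.find("error") is not None:
            failed.append(f'{case.get("classname")}.{case.get("name")}')
    return {"tests": int(suite.get("tests", 0)), "failed": failed}


def summarize_directory(results_dir):
    """Combine every TEST-*.xml report found under results_dir."""
    totals = {"tests": 0, "failed": []}
    for report in sorted(Path(results_dir).glob("**/TEST-*.xml")):
        summary = summarize_suite(report.read_bytes())
        totals["tests"] += summary["tests"]
        totals["failed"].extend(summary["failed"])
    return totals


def all_passed(totals):
    return not totals["failed"]
```

A CI job could call `summarize_directory` after the test task and fail the build when `all_passed` returns `False`. That way nobody has to read the logs to learn whether the build is green.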

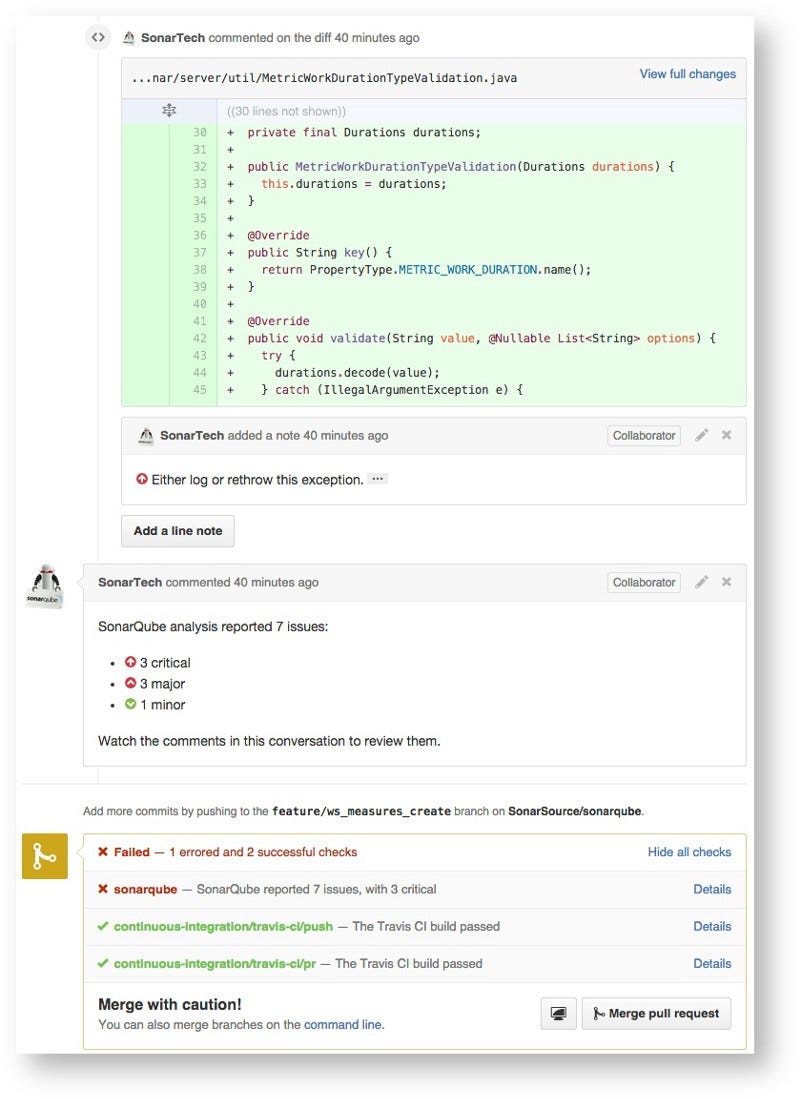

In addition to running tests on every pull request, we require that all tests pass before a pull request can be merged. To enforce this, we enabled protected branches and required status checks on GitHub, so all integrated code must be tested and must have passed code review. This step keeps the build from breaking and catches code changes that would make a unit test regress.
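GitHub enforces this rule on its side, so no custom code is needed. The logic is still easy to state precisely, though. The sketch below models it loosely: a pull request is mergeable only when every required status check reports `success` and the change has an approving review. The check names and the `can_merge` function are hypothetical. The state strings follow GitHub’s commit status states (`success`, `pending`, `failure`, `error`).

```python
SUCCESS = "success"


def can_merge(required_contexts, statuses, approved):
    """Return (mergeable, blockers) for a protected-branch style rule.

    required_contexts: names of checks that must pass (hypothetical, e.g. "unit-tests").
    statuses: mapping of check name -> latest state reported for the head commit.
    approved: whether the pull request has an approving review.
    """
    # A required check that is missing, pending, failed, or errored blocks the merge.
    blockers = [context for context in required_contexts if statuses.get(context) != SUCCESS]
    return bool(approved and not blockers), blockers


mergeable, blockers = can_merge(
    ["unit-tests", "lint"], {"unit-tests": "success", "lint": "pending"}, approved=True
)
print(mergeable, blockers)
```

Treating a missing status the same as a failing one matters. A check that never reported should not count as a pass.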

3) Run static code analysis on Pull Requests

On a small team, asking for a pull request review is like asking a friend to proofread your blog post. That friend is happy to give feedback, but she might not want to hunt down every spelling and grammar error, too. To preserve friendships and take some of the tedium and pressure out of code reviews, we run static code analysis on all pull requests, which is like running a spelling and grammar check on a text document.
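The post doesn’t name a specific analyzer. As one illustration, the sketch below summarizes an Android Lint XML report into text that could be posted on a pull request. It assumes the report has an `<issues>` root with one `<issue>` element per finding, each carrying `id`, `severity`, and `message` attributes plus an optional `<location file=… line=…>` child. Treating `Error` and `Fatal` as blocking is our own assumption, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Assumption: only these severities should fail the pull request check.
BLOCKING_SEVERITIES = {"Fatal", "Error"}


def summarize_lint(xml_source):
    """Return (counts_by_severity, findings, blocking) from a lint XML report."""
    root = ET.fromstring(xml_source)
    findings = []
    for issue in root.iter("issue"):
        location = issue.find("location")
        where = "unknown location"
        if location is not None:
            where = f'{location.get("file")}:{location.get("line", "?")}'
        findings.append(
            (issue.get("severity", "Unknown"), issue.get("id"), where, issue.get("message", ""))
        )
    counts = Counter(severity for severity, _, _, _ in findings)
    blocking = any(severity in BLOCKING_SEVERITIES for severity, _, _, _ in findings)
    return counts, findings, blocking


def format_comment(counts, findings):
    """Render findings as a short, reviewer-friendly pull request comment."""
    header = ", ".join(f"{count} {severity}" for severity, count in sorted(counts.items()))
    lines = [f"- [{sev}] {issue_id} at {where}: {msg}" for sev, issue_id, where, msg in findings]
    return "\n".join([f"Lint found: {header}"] + lines)
```

Posting the findings as a comment leaves the human reviewer free to focus on design and behavior instead of mechanical issues.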

4) Update ticket status with build number after successful build

Updating tickets can be a tedious, error-prone part of the development cycle. To improve this, we automatically move tickets to their “Ready for QA” state and add a comment with the number of the build in which they were fixed.

The JIRA Plugin for Jenkins provides exactly this functionality. It improves everyone’s life by keeping tickets up to date and tagging each one with the number of the build that contains its fix.
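The plugin handles this for us, and the sketch below is not how it is implemented. It only illustrates the underlying idea: find ticket keys (such as the hypothetical `AND-12`) in the commit messages of a successful build, then plan one “Ready for QA” update per ticket with a build-number comment. The regular expression only approximates the Jira key format, and the update dictionaries are a made-up shape rather than a Jira API payload.

```python
import re

# Approximation of a Jira issue key: uppercase project key, a hyphen, then digits.
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9_]+-\d+\b")


def ticket_keys(commit_messages):
    """Return ticket keys in order of first appearance, without duplicates."""
    keys = []
    for message in commit_messages:
        for key in ISSUE_KEY.findall(message):
            if key not in keys:
                keys.append(key)
    return keys


def ready_for_qa_updates(commit_messages, build_number):
    """Plan one hypothetical ticket update per referenced key."""
    comment = f"Fixed in build {build_number}"
    return [
        {"issue": key, "transition": "Ready for QA", "comment": comment}
        for key in ticket_keys(commit_messages)
    ]
```

A pattern this loose can also match tokens like `UTF-8`. A real integration would restrict it to the team’s known project keys.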

5) Distribute builds with release notes to team members

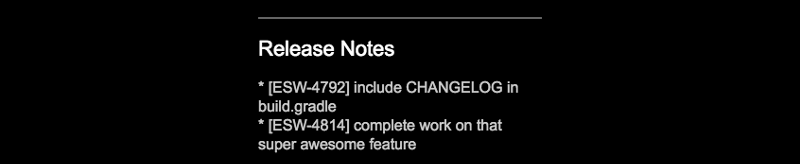

At eero, we use Fabric’s Beta service to share test builds with the team. One nice feature of the Beta service is that it lets you attach release notes to new builds.

We take advantage of this so that every email Fabric Beta sends includes a “Release Notes” section outlining the changes since the last build.
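One way to produce that section is from the commit history since the previous build. The sketch below reads commit subjects with `git log --pretty=format:%s <ref>..HEAD`, where the ref (for example, a tag for the last distributed build) is a hypothetical convention. It then formats the subjects as a short list and skips merge commits. How the text is handed to Fabric Beta depends on the upload tooling and isn’t shown.

```python
import subprocess


def commit_subjects_since(since_ref, repo_dir="."):
    """Return commit subject lines from since_ref (exclusive) up to HEAD."""
    result = subprocess.run(
        ["git", "log", "--pretty=format:%s", f"{since_ref}..HEAD"],
        cwd=repo_dir,
        capture_output=True,
        text=True,
        check=True,
    )
    return [line for line in result.stdout.splitlines() if line.strip()]


def release_notes(subjects):
    """Format commit subjects as a "Release Notes" section, skipping merge commits."""
    stripped = (subject.strip() for subject in subjects)
    changes = [s for s in stripped if s and not s.startswith("Merge ")]
    if not changes:
        return "Release Notes\n- No changes since the last build"
    return "\n".join(["Release Notes"] + [f"- {change}" for change in changes])
```

Notes built from commit subjects are only as useful as the commit messages themselves. This approach works best when the team writes subjects with testers in mind.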

What’s next?

We have a lot of ideas on how to continue to improve this process, but we’ll only include them if they hit our bar for quality and ease-of-use. Some things on our mind are:

- Automatically optimizing images to reduce app size

- Running an automated suite of tests on devices for even better test coverage

- Tracking performance metrics, including frame rate and boot time

Is it all worth it?

We think so. Automation has improved team communication, saved time, and allowed us to write better code. And, at the end of the day, this allows us to develop features more quickly and maintain the high quality bar that our customers expect. Want to help us continue to improve this process? eero is hiring.
